Introduction

Noel Burton-Krahn

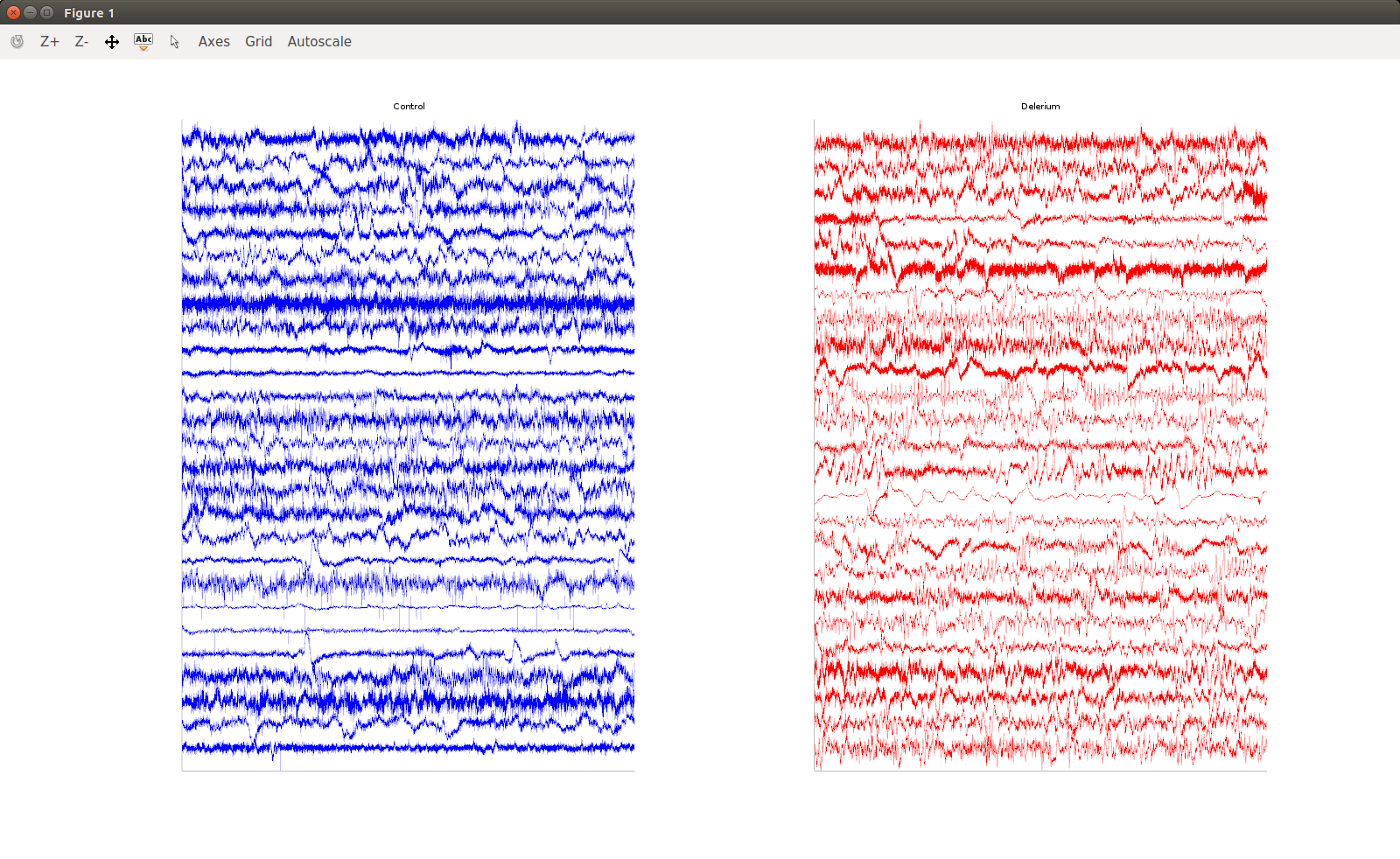

Delirium Detection in EEGs

Got baseStudyEDF: control and delirium EEGs. Total Control: 27, Total Delirium: 25

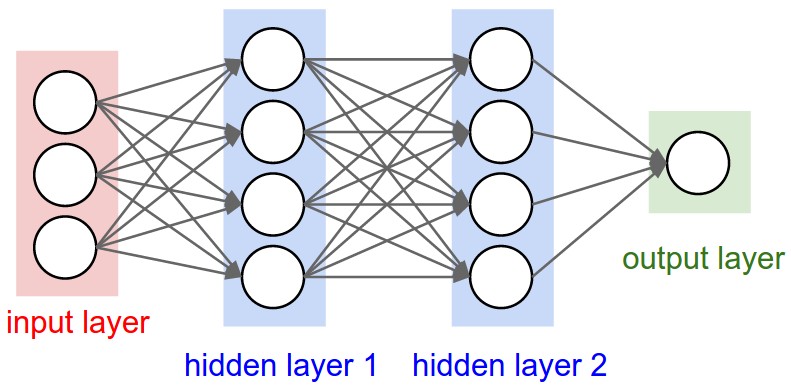

Neural Networks

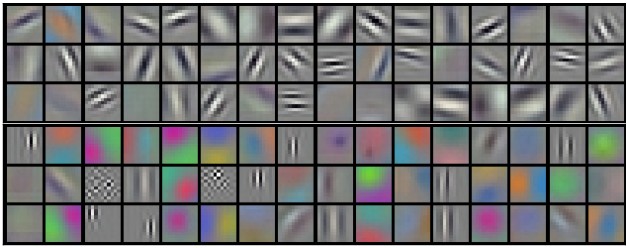

Images from CS231n Convolutional Neural Networks for Visual Recognition

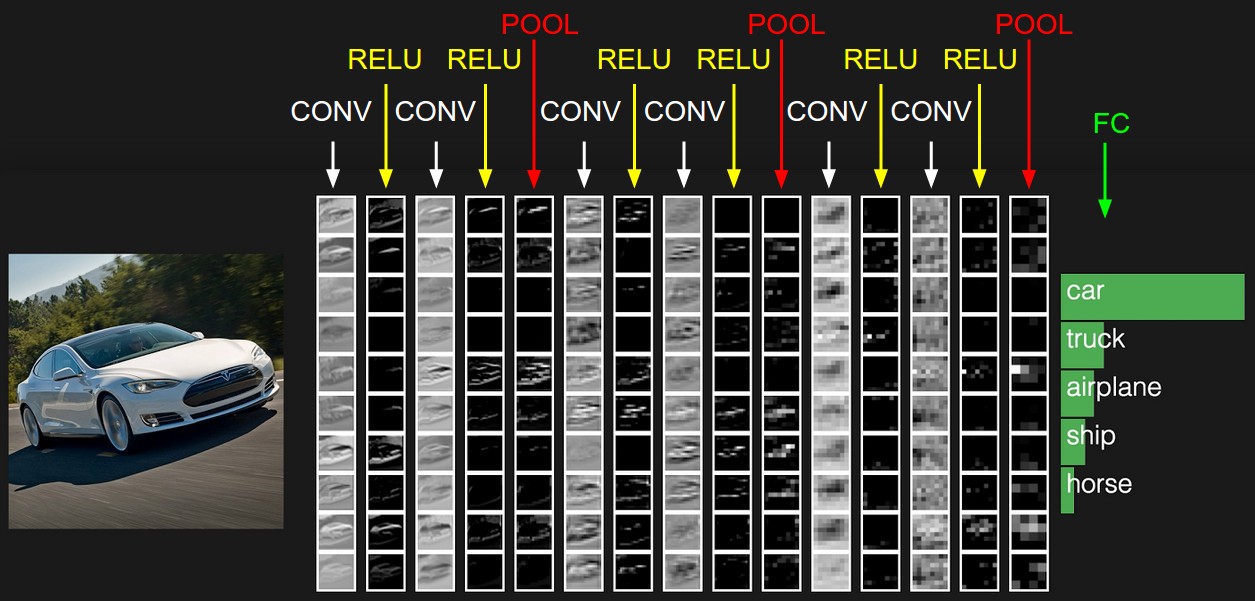

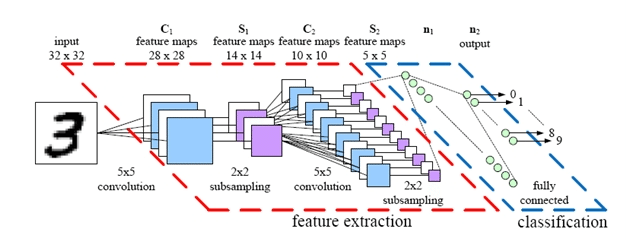

Convolutional Networks

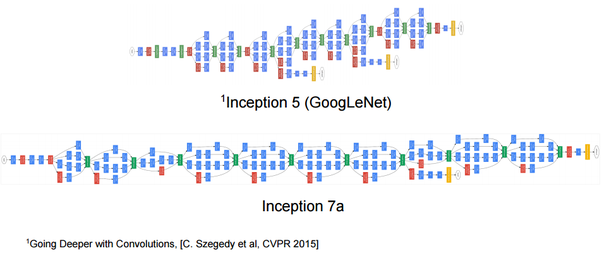

Deep Neural Networks

Demo Digit Classifier

2D Visualization of a CNN by A Harley, Ryerson

https://vimeo.com/125940125

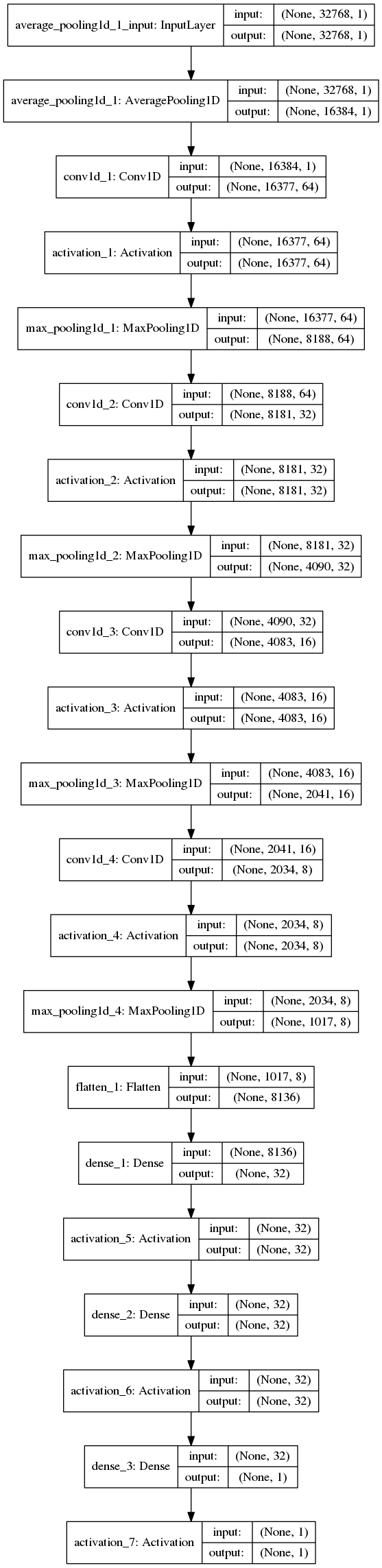

A CNN for Delirium Detection

Made 4CONV + 2FC CNN: avg*2, 64x8, 32x8, 16x8, 8x4, 64, 64, 1

in Python's Keras

Input(inputs.shape[1:])

AveragePooling1D(pool_size=2)

Conv1D(64, 8, activation='relu')

MaxPooling1D()

Conv1D(32, 8, activation='relu')

MaxPooling1D()

Conv1D(16, 8, activation='relu')

MaxPooling1D()

Conv1D(2, 8, activation='relu')

MaxPooling1D(8)

Flatten()

Dense(64, activation='sigmoid', kernel_regularizer=regularizers.l2(0.01), activity_regularizer=regularizers.l1(0.01))

Dense(64, activation='sigmoid')

Dense(1, activation='sigmoid')
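To sanity-check the stack above, it can help to trace how the time axis shrinks layer by layer. The sketch below does this in plain Python, assuming Keras defaults (`padding='valid'` and stride 1 for `Conv1D`, and a pool size of 2 with matching stride where no argument is given). The input length of 2048 samples is a hypothetical value chosen for illustration; the actual input shape is not stated here.

```python
# Trace the sequence length through the layer stack listed above.
# Assumes Keras defaults: Conv1D uses padding='valid' and stride 1;
# pooling layers use stride == pool_size and drop any remainder.

def conv1d_len(length, kernel_size):
    """Output length of a 'valid', stride-1 convolution."""
    return length - kernel_size + 1

def pool1d_len(length, pool_size=2):
    """Output length of a 'valid' pooling layer with stride == pool_size."""
    return length // pool_size

# (layer name, length function, argument), in the order of the listing.
LAYERS = [
    ("AveragePooling1D(2)", pool1d_len, 2),
    ("Conv1D(64, 8)", conv1d_len, 8),
    ("MaxPooling1D()", pool1d_len, 2),
    ("Conv1D(32, 8)", conv1d_len, 8),
    ("MaxPooling1D()", pool1d_len, 2),
    ("Conv1D(16, 8)", conv1d_len, 8),
    ("MaxPooling1D()", pool1d_len, 2),
    ("Conv1D(2, 8)", conv1d_len, 8),
    ("MaxPooling1D(8)", pool1d_len, 8),
]

def trace_lengths(input_len):
    """Return [(layer name, output length), ...] for the whole stack."""
    out = []
    length = input_len
    for name, fn, arg in LAYERS:
        length = fn(length, arg)
        out.append((name, length))
    return out

if __name__ == "__main__":
    INPUT_LEN = 2048  # hypothetical samples per EEG window
    for name, length in trace_lengths(INPUT_LEN):
        print(f"{name:22s} -> {length}")
```

Under these assumptions the last pooling layer leaves 14 time steps, so with 2 filters in the final convolution `Flatten()` would hand 28 values to the first `Dense` layer.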

Training

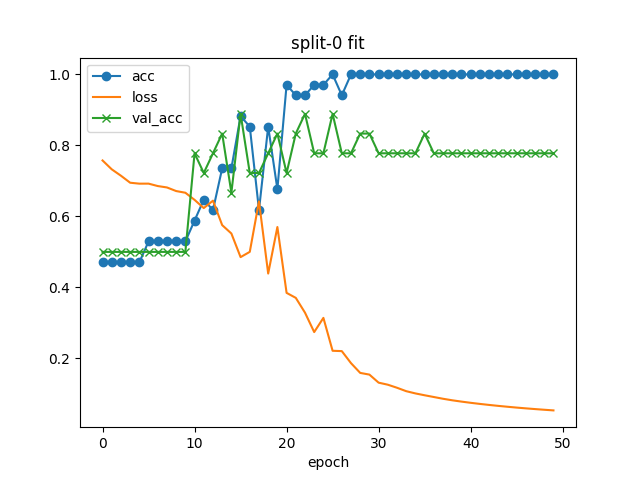

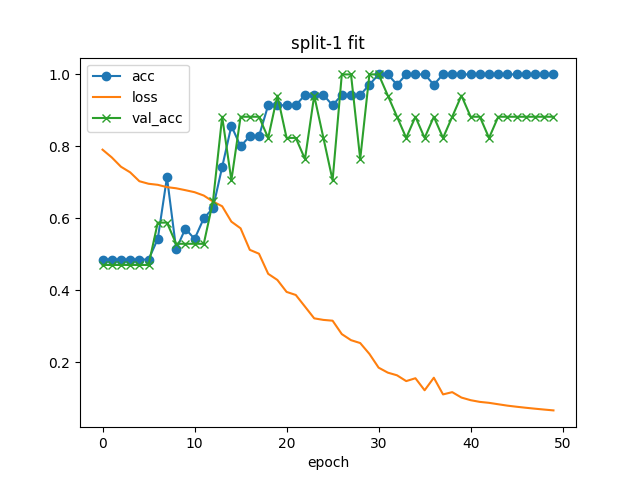

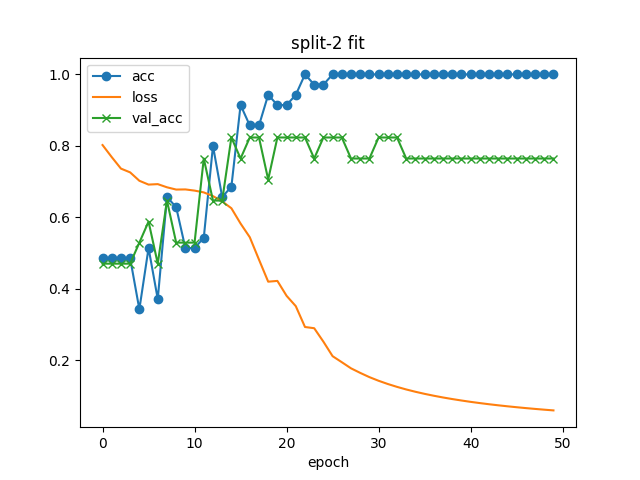

Trained on 3 splits: train on 66% of the data, evaluate on the remaining 33% held-out data. Average accuracy: (94 + 100 + 82)/3 = 92%
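The three-split evaluation above can be sketched as follows. This is a minimal illustration, not the original training script: it assumes the split is made over whole recordings (the source does not say whether splitting was per recording or per window), and the helper names are hypothetical.

```python
import random

def three_way_splits(n_items, seed=0):
    """Yield (train_idx, val_idx) for 3 folds; each fold holds out about 1/3."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::3] for i in range(3)]
    for k in range(3):
        val = folds[k]
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield train, val

def mean_accuracy(accuracies):
    """Plain average of the per-split accuracies."""
    return sum(accuracies) / len(accuracies)

if __name__ == "__main__":
    n_recordings = 27 + 25  # control + delirium counts reported above
    for train, val in three_way_splits(n_recordings):
        print(len(train), "train /", len(val), "held out")
    print(mean_accuracy([94, 100, 82]))  # per-split accuracies reported above
```

Splitting by recording (or by patient) rather than by window keeps windows from the same EEG out of both the training and validation sets, which would otherwise inflate the validation accuracy.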

The training graphs demonstrate:

- 92% accuracy on held-out validation data is pretty good.
- The training dataset is too small. Once the training accuracy reaches 100%, the net has overfitted to the training data.

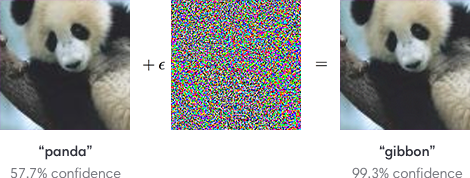

CNNs are easily Fooled

Attacking Machine Learning with Adversarial Examples

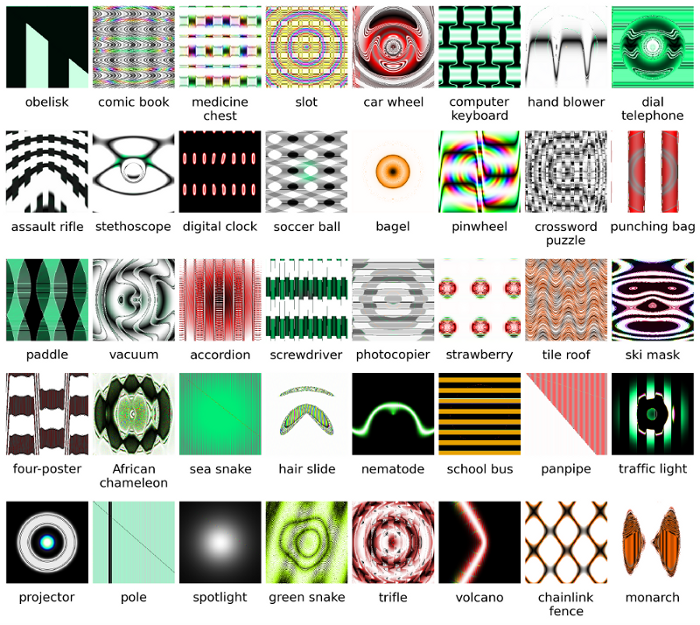

Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images

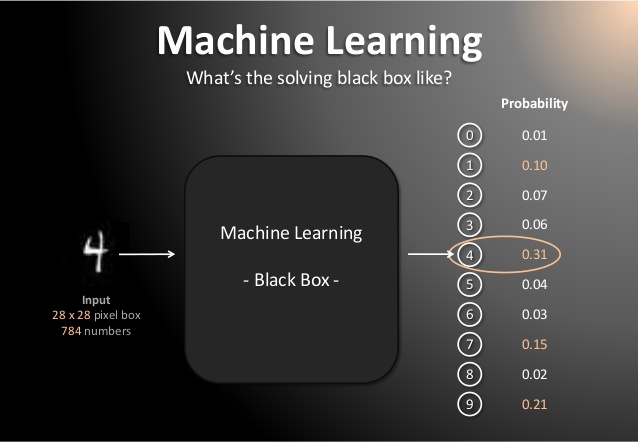

The Black Box Problem

Medical device approval requires justification.

FDA Approval is Possible

Despite the challenges, devices based on neural networks have recently been granted FDA approval, and there are many startups emerging in this space.

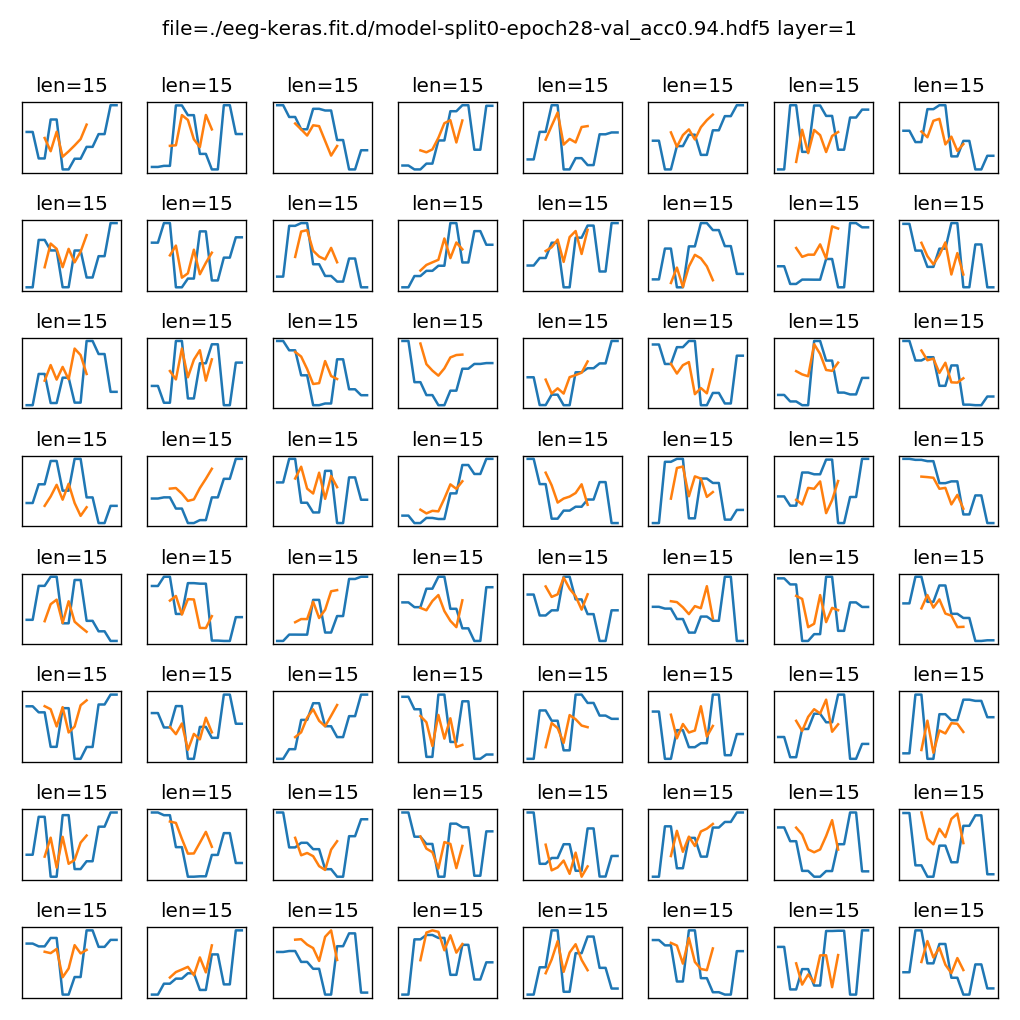

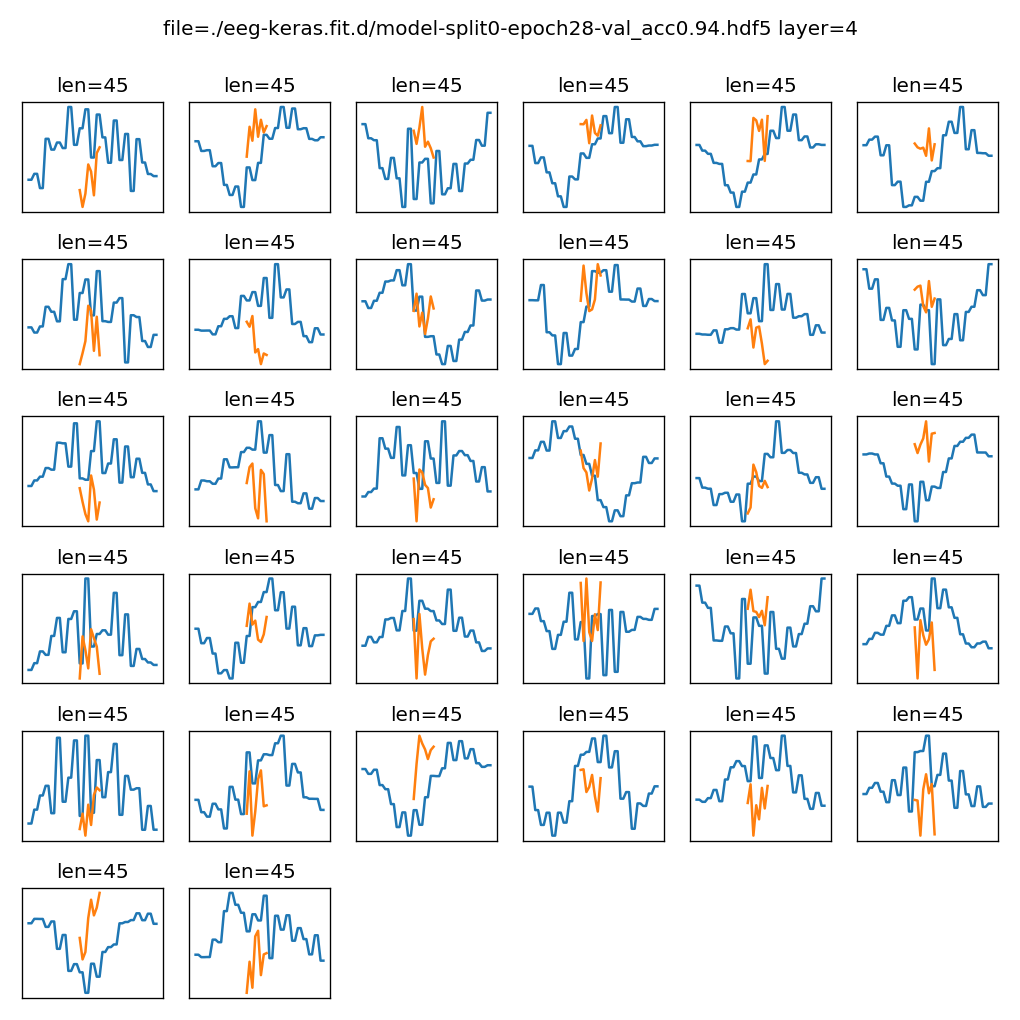

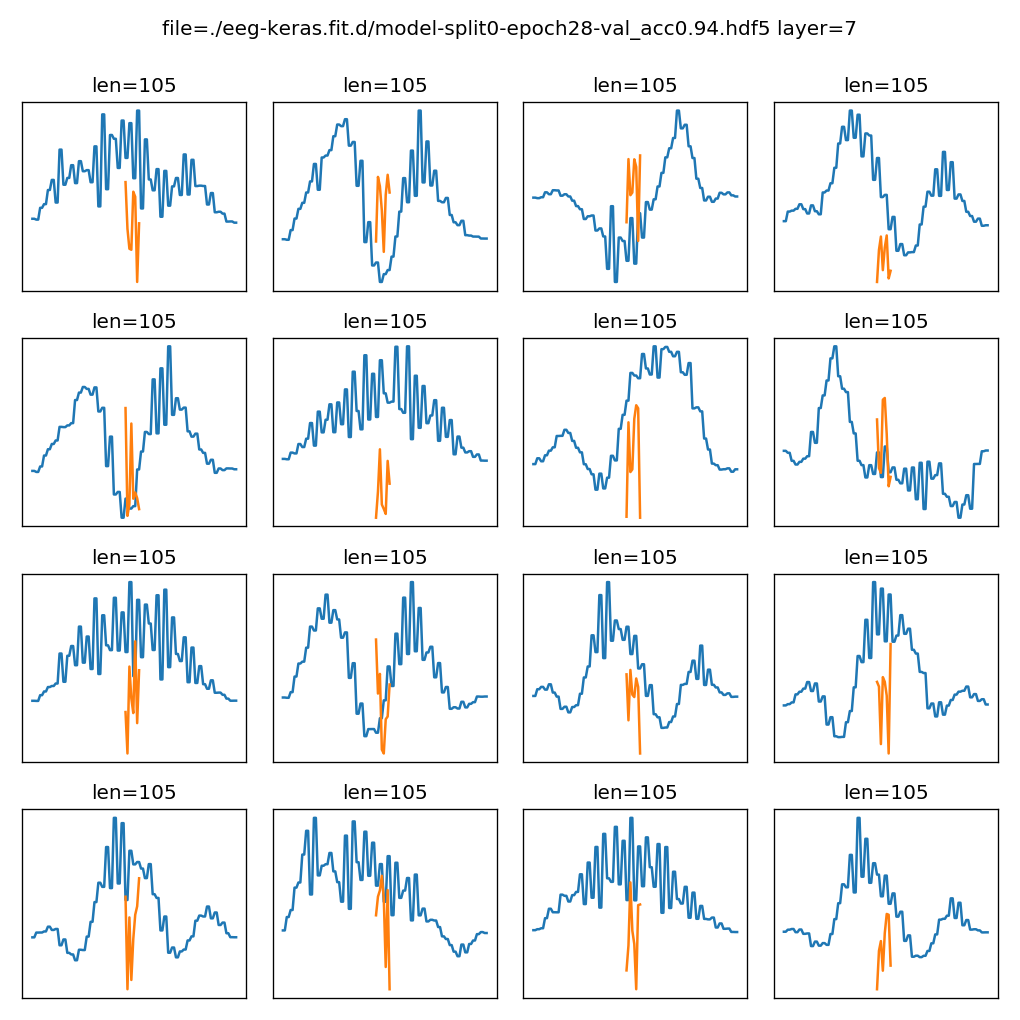

CNN Analysis

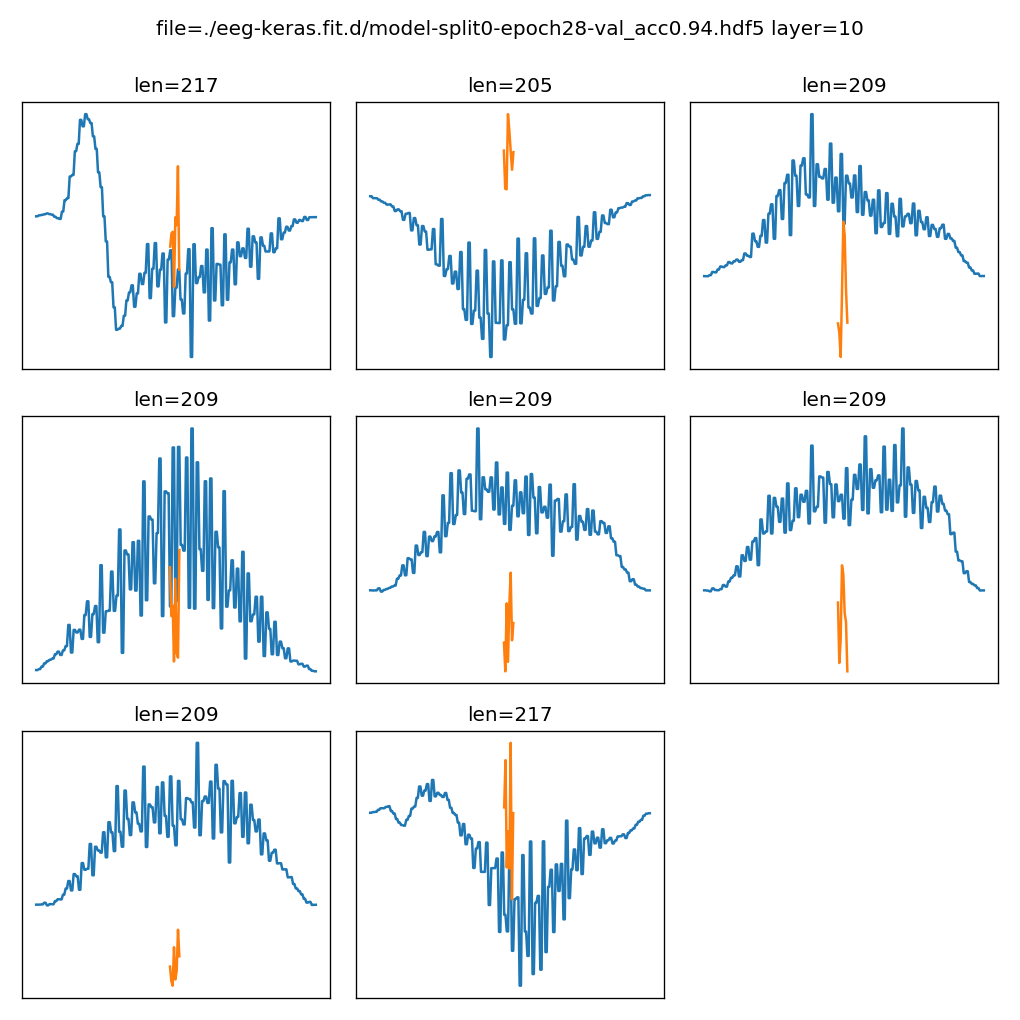

Did a deeper analysis of the CNN convolution filters. Convolution filters are similar to wavelets in that they perform a multiscale correlation of the input signal with learned feature fragments. Deeper layers convolve the outputs of earlier layers to match larger-scale features.

These graphs show the input feature that maximizes neuron excitement for each convolution filter at each level.

The final correlation layer's output is passed through a fully-connected network for final classification.
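The "correlation with learned feature fragments" view can be illustrated with a sliding dot product. The sketch below is plain Python with a made-up 4-tap filter (hypothetical, not one of the trained filters): the response peaks exactly where the input contains the fragment the filter encodes.

```python
def correlate_valid(signal, kernel):
    """Sliding dot product of kernel over signal ('valid' positions only).

    For a single input channel this is what a Conv1D filter computes
    before the bias and activation are applied.
    """
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)

if __name__ == "__main__":
    kernel = [1.0, -1.0, 1.0, -1.0]           # hypothetical learned filter
    signal = [0.0] * 6 + kernel + [0.0] * 6   # same fragment embedded at offset 6
    response = correlate_valid(signal, kernel)
    print(argmax(response), response[argmax(response)])
```

For a first-layer filter the input that excites it most, at fixed input energy, is the filter's own shape, which is why plotting maximizing inputs gives a readable picture of what each filter matches.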

Other ways of looking at EEGs

Frequency spectrogram

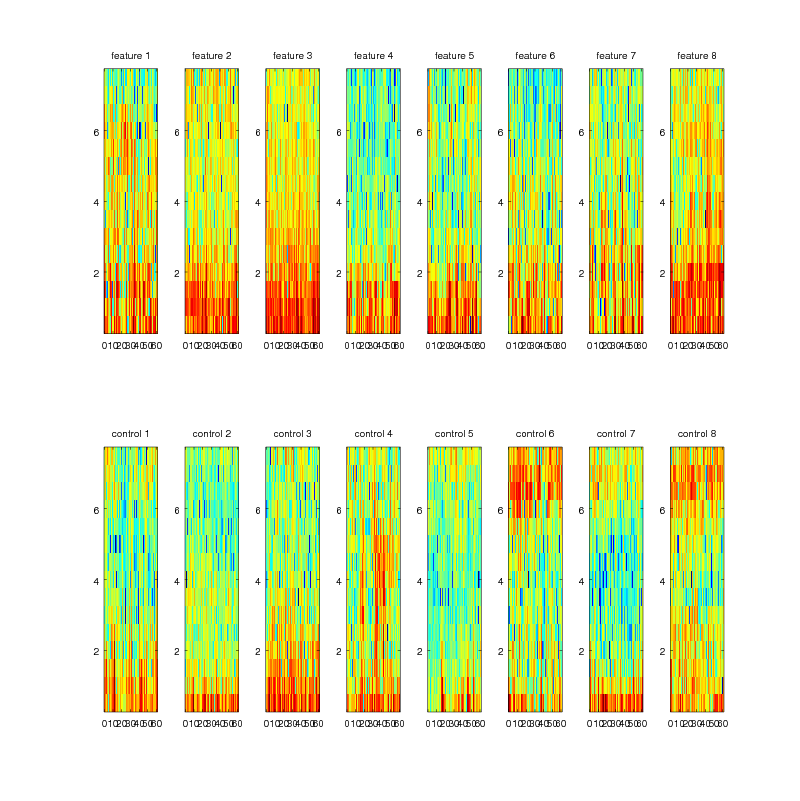

Spectrograms of 8 delirium and 8 control EEGs
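For readers unfamiliar with how such a spectrogram is built, here is a minimal sketch: cut the signal into frames and take the magnitude of each frame's Fourier transform. It uses a naive DFT and a rectangular window to stay dependency-free; the sampling rate, frame length, and test tone are hypothetical, and the plots above were presumably made with a proper FFT library.

```python
import cmath
import math

def dft_magnitudes(frame):
    """Magnitudes of DFT bins 0..N/2 of a real-valued frame (naive O(N^2))."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags

def spectrogram(signal, frame_len, hop):
    """One magnitude spectrum per frame (rectangular window, no padding)."""
    return [
        dft_magnitudes(signal[start:start + frame_len])
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]

if __name__ == "__main__":
    fs = 64       # hypothetical sampling rate in Hz
    tone_hz = 8   # hypothetical test tone
    signal = [math.sin(2 * math.pi * tone_hz * t / fs) for t in range(4 * fs)]
    # With frame_len == fs, bin k corresponds to k Hz.
    spec = spectrogram(signal, frame_len=fs, hop=fs // 2)
    peak_bins = [max(range(len(row)), key=row.__getitem__) for row in spec]
    print(peak_bins)
```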

Wavelet transform

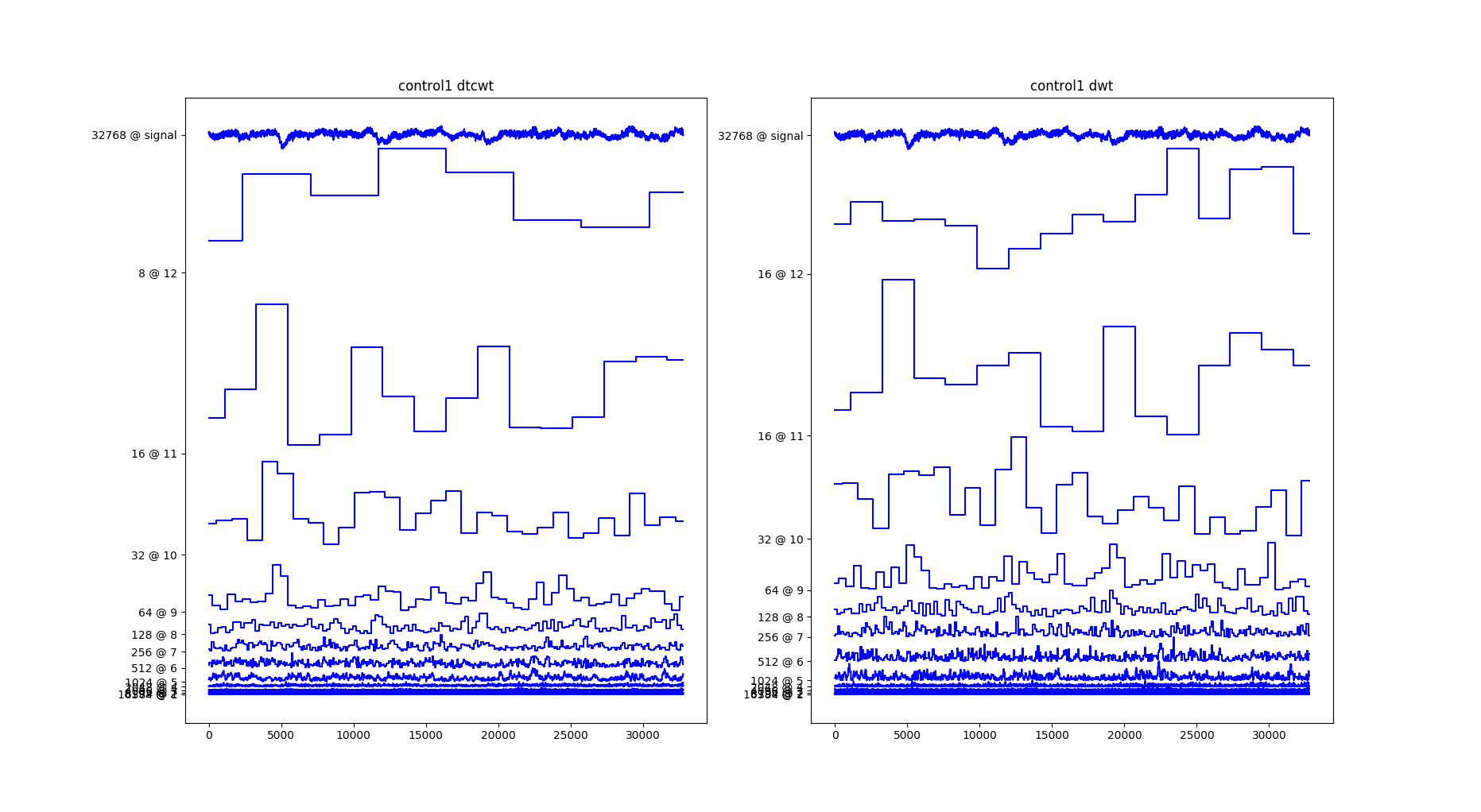

Problem: The DWT is shift-variant

Solution? The Dual-Tree Complex Wavelet Transform is shift-invariant
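To make the shift-variance problem concrete, the sketch below applies one level of a Haar DWT to a step edge and to the same edge moved one sample later. Haar is used here only because it is the simplest wavelet to write out by hand; the wavelet actually used for the EEG analysis is not stated.

```python
import math

def haar_dwt_level(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    s = math.sqrt(2.0)
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) / s for i in pairs]
    detail = [(signal[i] - signal[i + 1]) / s for i in pairs]
    return approx, detail

def energy(coeffs):
    """Sum of squared coefficients."""
    return sum(c * c for c in coeffs)

if __name__ == "__main__":
    step = [0.0] * 4 + [1.0] * 4      # edge aligned with a sample-pair boundary
    shifted = [0.0] * 5 + [1.0] * 3   # same edge, one sample later
    _, d_step = haar_dwt_level(step)
    _, d_shifted = haar_dwt_level(shifted)
    print(energy(d_step), energy(d_shifted))
```

The aligned edge produces no detail energy at all, while the shifted edge produces a nonzero detail coefficient. The same feature therefore looks different to a classifier depending on where it happens to fall in the window.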

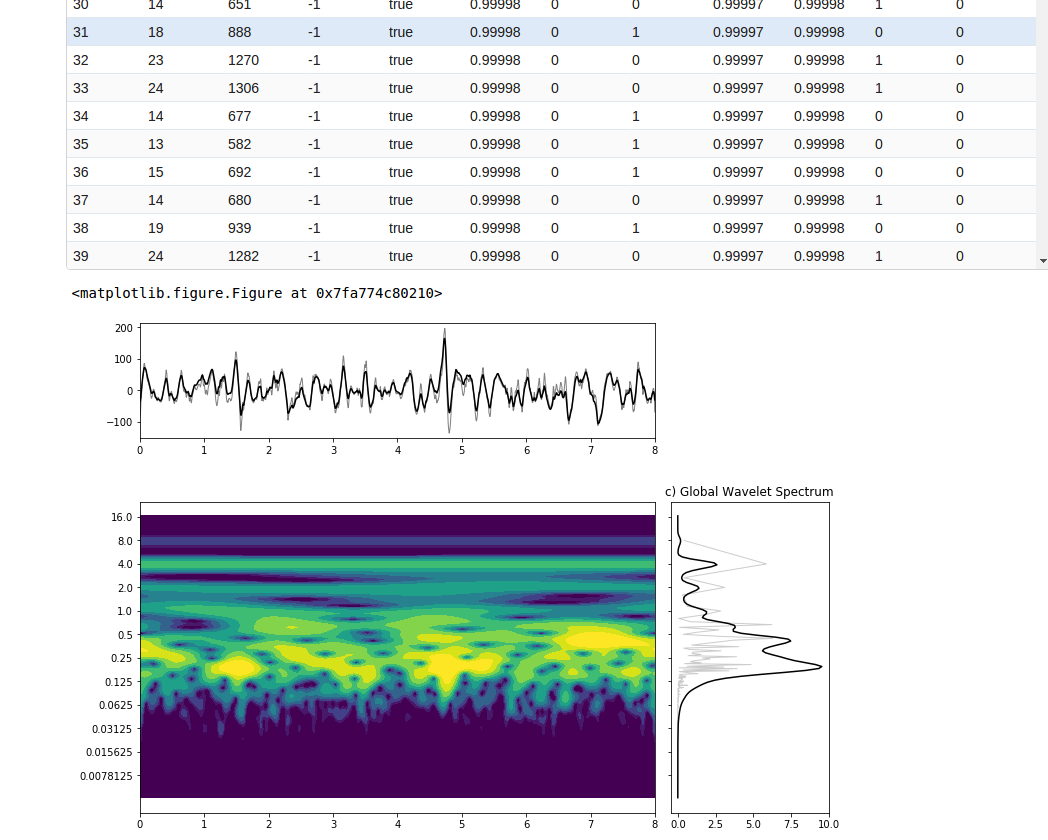

Here's the DTCWT vs the DWT for a control EEG

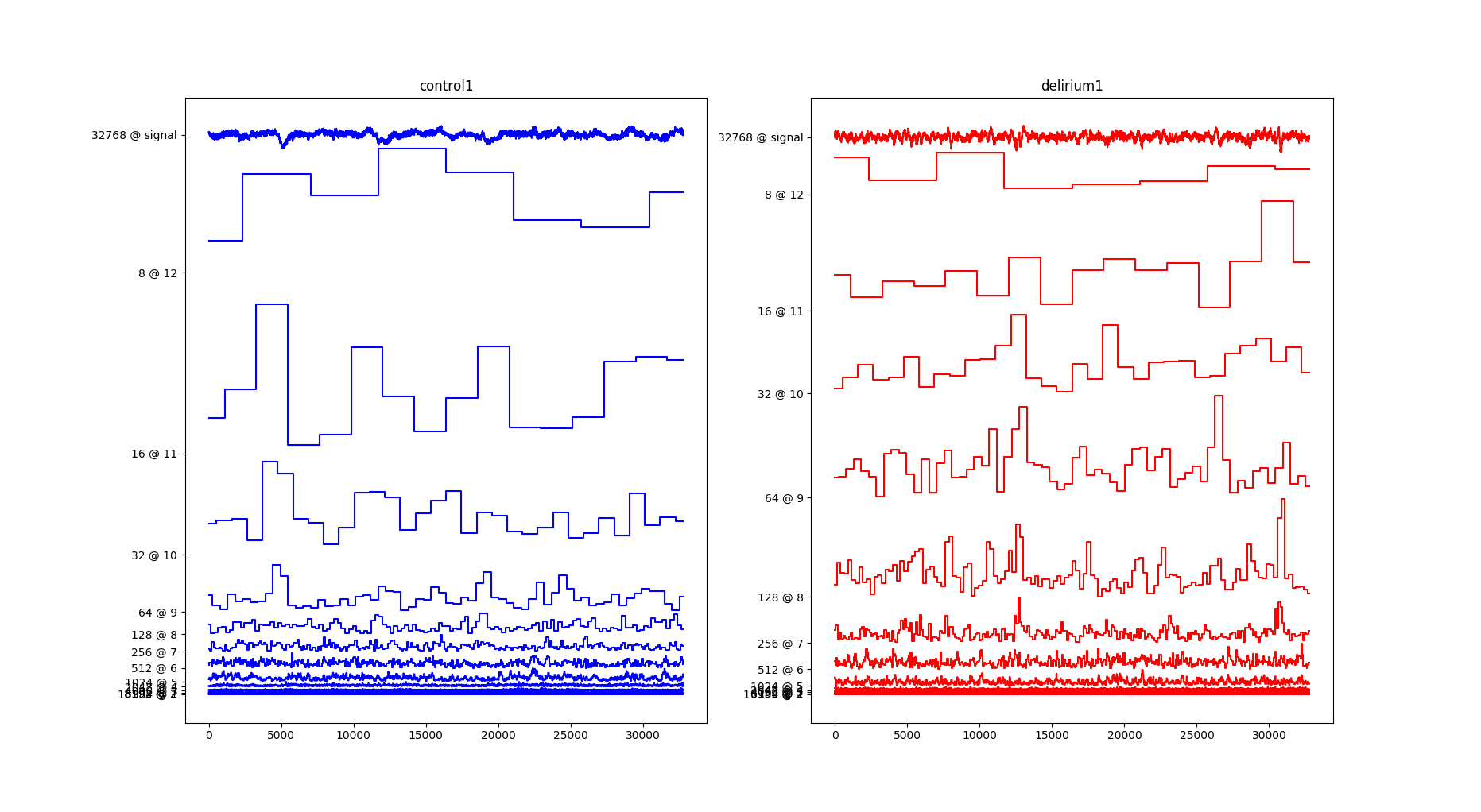

And the DTCWT for a control vs delirium EEG

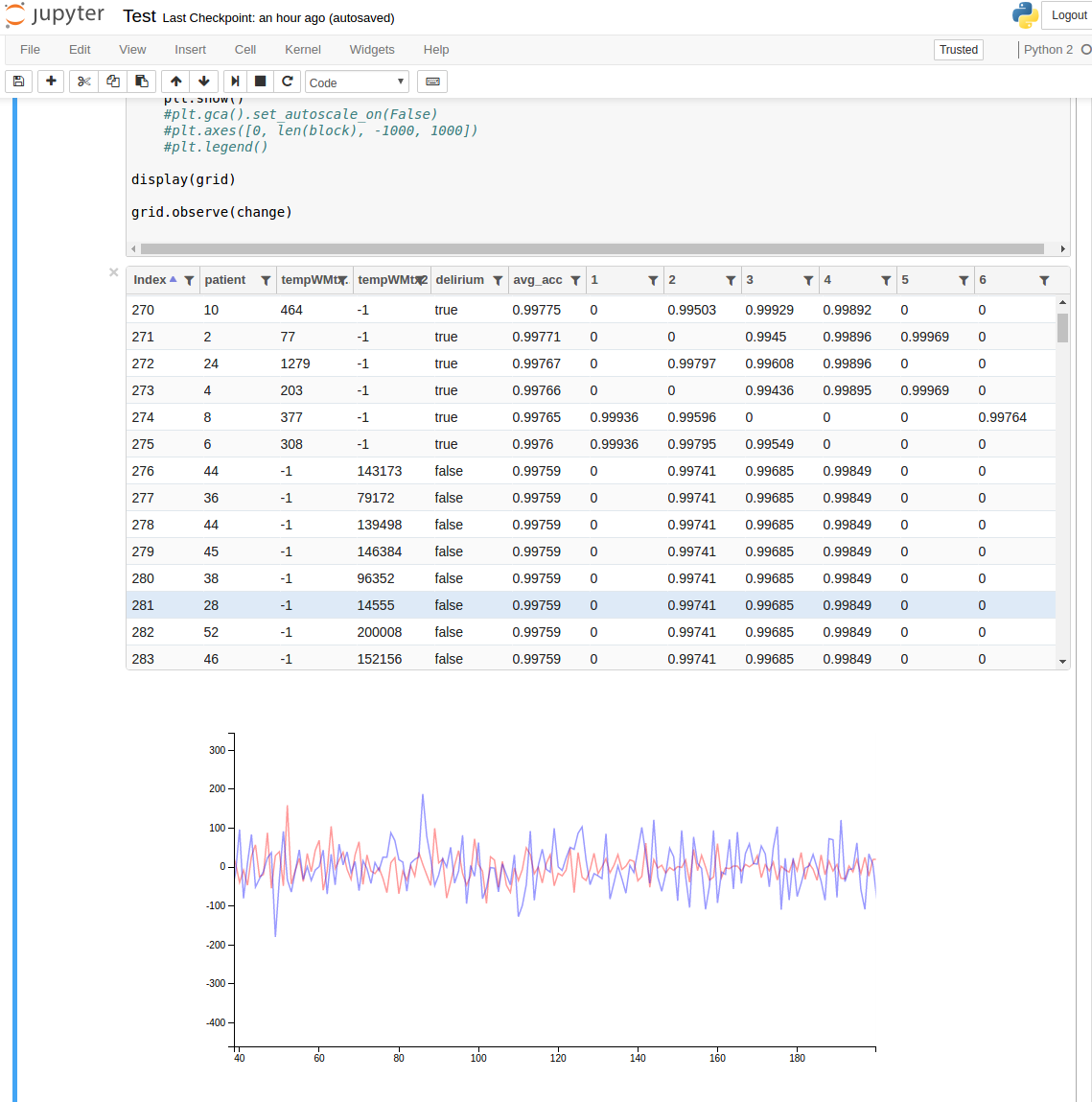

Examined 8-second DWT samples grouped by patient, ordered by validate_acc during training. Each 8-second DWT block was used as a validation point in 3 Keras training runs, and the blocks were sorted by average accuracy. The results were tabulated in a Jupyter notebook that could overlay blocks interactively for visual comparison.

The most consistently accurate delirium blocks appeared to have high-frequency oscillations around coeffs 90-128, and sometimes high amplitudes in the 1-64 coeff range.

The most consistently accurate control blocks had low-frequency oscillations around coeffs 90-128, and low amplitudes overall.

There were exceptions to these rules that were nevertheless consistently accurate.
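The ranking step described above (average each block's validation accuracy over the training runs, then sort) can be sketched as below. The block ids and accuracy values are hypothetical placeholders, and the per-patient grouping and notebook overlay are not reproduced.

```python
from collections import defaultdict

def rank_blocks(run_results):
    """Rank blocks by mean validation accuracy across runs.

    run_results: list of dicts, one per training run, each mapping
    block_id -> validation accuracy for that block in that run.
    Returns [(block_id, mean accuracy), ...], most accurate first.
    """
    per_block = defaultdict(list)
    for run in run_results:
        for block_id, acc in run.items():
            per_block[block_id].append(acc)
    means = {block: sum(accs) / len(accs) for block, accs in per_block.items()}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)

if __name__ == "__main__":
    # Hypothetical block ids and accuracies, for illustration only.
    runs = [
        {"p01_b0": 1.0, "p01_b1": 0.5, "p02_b0": 0.0},
        {"p01_b0": 1.0, "p01_b1": 1.0, "p02_b0": 0.5},
        {"p01_b0": 1.0, "p01_b1": 0.0, "p02_b0": 0.0},
    ]
    for block_id, mean_acc in rank_blocks(runs):
        print(block_id, round(mean_acc, 3))
```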

Continuous wavelet transform of 8-second delirium EEG

From noel/classifiers/CWTTest.ipynb

Trying CNN on Wavelet transform

Goal: to find a wavelet basis that isolates the delirium features.

Found: DTCWT scales 6-8 (8 Hz, 4 Hz, 2 Hz; 896 points per sample, down from 32k) fed to an fc16, fc16 neural net: 98.1%
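One reading of "896 points per sample, from 32k" is simple dyadic arithmetic: a decomposition level j keeps one coefficient per 2^j input samples. The check below assumes "32k" means 32768 samples and counts one (complex) coefficient per position at each level; both are assumptions, not details given in the source.

```python
def coeffs_per_level(n_samples, level):
    """Coefficients at a dyadic level: one per 2**level input samples."""
    return n_samples // (2 ** level)

if __name__ == "__main__":
    n_samples = 32 * 1024  # assumes "32k" means 32768 samples
    counts = {level: coeffs_per_level(n_samples, level) for level in (6, 7, 8)}
    print(counts, sum(counts.values()))
```

Under these assumptions levels 6, 7 and 8 contribute 512, 256 and 128 coefficients, which sum to the 896 points quoted above.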

Conclusions

- Deep networks can produce high accuracy
- But they can be fooled, and the error response is unknown
- They need to be fully explained to satisfy regulatory requirements

Future Work

- Maybe a CNN with smaller FC layers would be easier to explain: just convolutions, no fully-connected layers?
- Try working in the wavelet domain to reduce the number of convolutional layers
- Try a genetic algorithm for optimizing layers
